How Shopify Handles The World's Biggest Flash Sales Without Exploding

by David Zhang, published Feb 7th, 2024

Background Info

Flash sales on Shopify aren't like those Black Friday stampedes you see IRL. Those are seasonal, whereas on Shopify, these shopping frenzies can happen at any time. For example, online brands will generate tons of hype for limited edition product drops and subsequently bombard Shopify servers with traffic when the product becomes available.

This means Shopify's architecture has to be robust and scalable, especially as the number of merchants grows.

Shopify's tech stack

- MySQL, Redis, Memcached for storing data

- Mainly Ruby on Rails for the backend, with some Go and Lua for performance-critical components

- React and React Native with GraphQL on the frontend

Some other things to note:

- The main Rails backend is a monolith that gets deployed 40 times per day

- Large percentage of the workforce is fully remote and globally distributed

Shopify Data Architecture

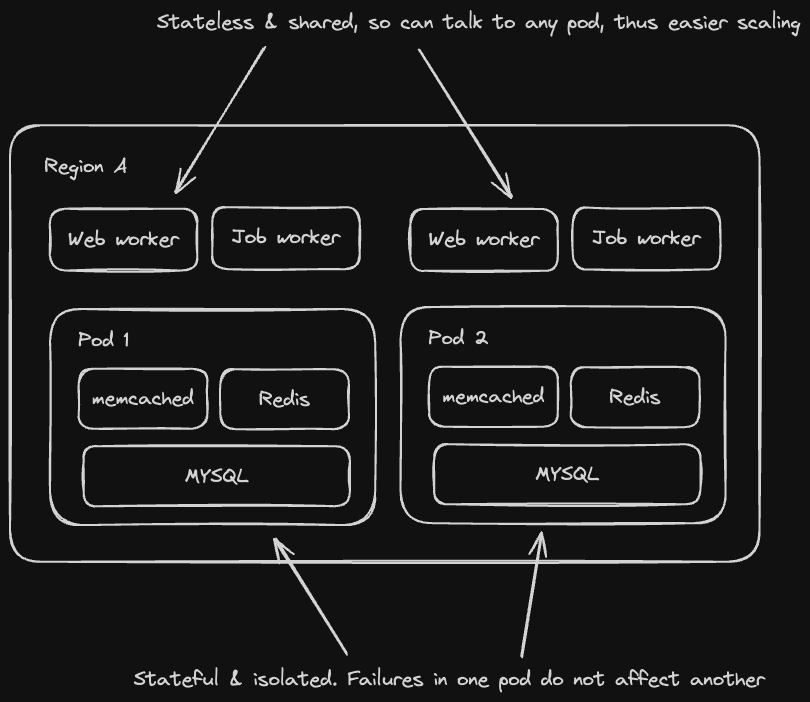

Shopify data is organized into pods. A pod contains data for one or more shops and is a "complete version of Shopify that can run anywhere in the world". Think of it like an enhanced data shard.

The pods themselves are stateful and isolated from one another. In contrast, the web workers and jobs that interact with them are stateless and can be shared. This means more web workers can be allocated to a particular pod during large traffic spikes (e.g. in the event of a flash sale).

Pods are also replicated across regions, with active pods in one region being inactive in another. This enables failovers in the event of catastrophic outages.

The lifecycle of a request

- A request comes in to some merchant's online store, e.g. tshirthero.shopify.com

- Request goes to OpenResty, an open source nginx distribution with Lua scripting support.

  - Used for load balancing and bot/scalper detection.

- Request gets routed to the appropriate region where the pod corresponding to the shop is active

- Rails application running in that region receives the request and handles it
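The routing step can be pictured as two lookups: shop domain to pod, then pod to the region where that pod is currently active. Here's a minimal Python sketch of that idea. The table contents and the names `SHOP_TO_POD`, `ACTIVE_REGION`, `route`, and `fail_over` are all made up for illustration; the article places the real routing at the OpenResty layer.

```python
# Hypothetical routing tables; all shop, pod, and region names are made up.
SHOP_TO_POD = {
    "tshirthero.shopify.com": "pod-12",
    "sneakerdrop.shopify.com": "pod-7",
}
ACTIVE_REGION = {"pod-12": "us-east", "pod-7": "us-central"}


def route(host: str) -> tuple[str, str]:
    """Return the (pod, region) that should serve a request for this shop host."""
    pod = SHOP_TO_POD[host]
    return pod, ACTIVE_REGION[pod]


def fail_over(pod: str, standby_region: str) -> None:
    """Mark the pod's replica in another region as the active one."""
    ACTIVE_REGION[pod] = standby_region
```

Because the lookup is keyed on the pod rather than the shop, a regional failover is a single table update and every shop in that pod follows along.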

An Aside on Why Credit Card Payments Are A Bitch

"PCI-DSS", short for the Payment Card Industry Data Security Standard, sets a bunch of guidelines for securely storing and handling credit card information.

This presents a few problems for Shopify:

- Rails app is a monolith being deployed 40 times a day. Auditing every time isn't feasible.

- Shops can be customized via HTML and Javascript, and Shopify Apps can add additional functionality to stores. Don't wanna expose any credit card info to that stuff.

The solution? Isolate credit card payment data from the frontend and the Rails monolith entirely.

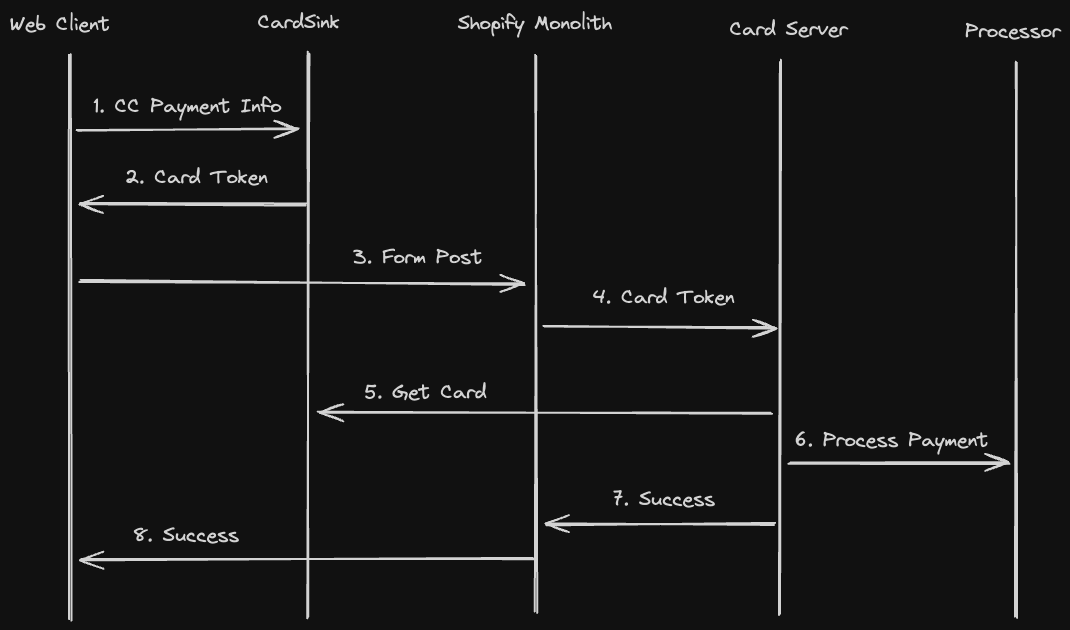

When a user makes a purchase, the following occurs:

- Credit card info is handled by a web form hosted in an iFrame, isolating it from the rest of the (potentially merchant-controlled) Javascript / HTML.

- The information is sent over to a service called CardSink, which encrypts and stores it, responding with a Card token back to the client

- The web client sends that Card token and order info to Shopify backend monolith

- The backend monolith calls another service called CardServer with the token and metadata.

- CardServer uses that token and metadata to decrypt the credit card info and uses it to send a request to the appropriate payment processor.

- Payment processor handles payment authorization, returns a success/declined response to the Shopify monolith, which converts it into an order.
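The steps above can be sketched in a few lines of Python. This is a toy model, not Shopify's code: the function names, the shared `_vault` dict, and the fake processor rule are assumptions, and encryption is left out entirely. The point it illustrates is that the monolith only ever sees the token.

```python
import secrets

# Toy stand-ins for the services named above. Encryption is deliberately
# omitted; a real vault would encrypt card data before storing it.
_vault: dict[str, dict] = {}  # storage shared by CardSink and CardServer (assumption)


def card_sink_store(card: dict) -> str:
    """CardSink: store the card data and hand back an opaque token."""
    token = secrets.token_urlsafe(16)
    _vault[token] = dict(card)
    return token


def fake_processor(card: dict, amount_cents: int) -> str:
    """Hypothetical payment processor: declines card numbers ending in 0000."""
    return "declined" if card["number"].endswith("0000") else "success"


def card_server_charge(token: str, amount_cents: int) -> str:
    """CardServer: resolve the token and call the payment processor."""
    card = _vault[token]
    return fake_processor(card, amount_cents)


def monolith_checkout(token: str, order: dict) -> dict:
    """The monolith handles the token and the order, never the card number."""
    status = card_server_charge(token, order["amount_cents"])
    return {"order": order, "payment": status}
```

Since the card number never passes through `monolith_checkout`, frequent deploys of that code don't touch anything that handles raw card data.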

The diagram earlier in this section visualizes the request flow.

Idempotency

Furthermore, credit card transactions need to be idempotent, meaning that sending and processing duplicate requests should yield the same result as sending and processing a single request. This is for obvious reasons: if we double charge a credit card, we get a pissed off customer.

Shopify does this by including an idempotency key, which is a unique key that the server uses to recognize subsequent retries of the same request. They also developed a library for creating idempotent actions, enabling devs to describe how to store state and retry requests.
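To make the idea concrete, here's a minimal Python sketch of an idempotency key check. It is not Shopify's library. The names `charge`, `_results`, and `charges` are hypothetical, and the in-memory dict stands in for whatever durable store a real system would use.

```python
import uuid

_results: dict[str, dict] = {}  # idempotency key -> stored response
charges: list[int] = []         # records side effects, to show what actually ran


def charge(idempotency_key: str, amount_cents: int) -> dict:
    """Charge once per key; retries get the stored response back."""
    if idempotency_key in _results:
        return _results[idempotency_key]
    charges.append(amount_cents)  # the real side effect happens only here
    response = {"status": "success", "amount_cents": amount_cents}
    _results[idempotency_key] = response
    return response


key = str(uuid.uuid4())    # generated once by the client, reused on every retry
first = charge(key, 2500)
retry = charge(key, 2500)  # e.g. the client timed out and sent it again
```

Both calls return the same response, but `charges` only records one entry. A production version would also need the check-and-store step to be atomic so that two concurrent retries can't both slip through.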

Scaling Pods

Application code only operates on shops. It doesn't care what pods these shops correspond to, meaning pods can be horizontally scaled to handle more load. However, as individual shops grow, pods need to be rebalanced to better utilize system resources.

A shop is moved from one pod to another via the following process:

- The MySQL rows corresponding to that shop's existing data are copied over to a MySQL instance living in another pod.

- New incoming data is replicated via the MySQL binary log ("binlog"), which is a stream of events for every row that is modified.

- Shopify open sourced a tool for doing this called Ghostferry, which is written in Go

- Once everything is in sync, the shop is write-locked, with incoming writes being queued and Redis jobs being migrated.

- Once that's done, the route table gets updated and the lock is removed.

- The shop data on the old pod is deleted asynchronously.

This whole process is super fast: less than 10 seconds of downtime on average, and less than 20 seconds for larger stores.
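The move can be simulated in memory to show the order of operations. This Python sketch does not use Ghostferry's actual API. Pods are plain dicts, the `binlog` is a list, and `during_copy` is a hypothetical parameter that fakes writes arriving while the bulk copy is still running.

```python
from collections import deque

# In-memory stand-ins; pod names, row shapes, and helpers are all hypothetical.
pods = {"pod-1": {"shop-a": {"orders": 3}}, "pod-2": {}}
route_table = {"shop-a": "pod-1"}
binlog: list[tuple] = []        # (shop, field, value) for every modified row
write_locked: set[str] = set()
queued_writes: deque = deque()


def write(shop: str, field: str, value) -> None:
    """Apply a write to the shop's current pod, or queue it while locked."""
    if shop in write_locked:
        queued_writes.append((shop, field, value))
        return
    pods[route_table[shop]][shop][field] = value
    binlog.append((shop, field, value))


def move_shop(shop: str, target: str, during_copy=()) -> None:
    source = route_table[shop]
    start = len(binlog)
    pods[target][shop] = dict(pods[source][shop])  # 1. bulk copy of existing rows
    for field, value in during_copy:               #    (simulated live traffic)
        write(shop, field, value)
    for s, field, value in binlog[start:]:         # 2. replay binlog events
        if s == shop:
            pods[target][shop][field] = value
    write_locked.add(shop)                         # 3. brief write lock
    route_table[shop] = target                     # 4. update the route table
    write_locked.discard(shop)                     # 5. remove the lock
    while queued_writes:                           #    and drain queued writes
        write(*queued_writes.popleft())
    del pods[source][shop]                         # 6. cleanup (async in the real flow)
```

Calling `move_shop("shop-a", "pod-2", during_copy=[("orders", 4)])` leaves the shop on `pod-2` with the write that landed mid-copy intact. The only window where writes are held is between steps 3 and 5, which is why the downtime stays short.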

Scaling The Storefront Rendering Layer

The rendering logic was extracted from the old Rails monolith and rewritten from scratch as a new Rails application. This enabled it to scale independently from the other parts of Shopify. OpenResty routing and Lua scripting ensured this process was completed with no downtime.

Over the past few years, there's also been a rise of "headless" commerce, reducing the rendering load for Shopify. Tech-savvy merchants are starting to roll out their own frontends as single page React applications.

Load Testing

Load-testing is done with an internal tool called Genghis, which spins up a bunch of worker VMs that execute Lua scripts. Lua scripts can describe end-to-end user behavior, like browsing, adding to cart, and checking out.

Genghis is run weekly against benchmark stores saved in every pod. It's also run against a CardServer which forwards these requests to a benchmark gateway (written in Go), to prevent spamming payment processors. The benchmark gateway responds with both successful and failed payments, with a distribution of latencies mimicking that of real production traffic.
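The benchmark gateway idea is simple enough to sketch. The real one is written in Go; this Python version is only an illustration, and the failure rate and latency values are invented numbers, not Shopify's.

```python
import random


def benchmark_gateway(rng: random.Random,
                      failure_rate: float = 0.1,
                      latencies_ms: tuple = (80, 120, 200, 450, 900)) -> dict:
    """Fake payment gateway response; never contacts a real processor.

    failure_rate and latencies_ms are made-up values standing in for a
    distribution measured from production traffic.
    """
    status = "declined" if rng.random() < failure_rate else "success"
    return {"status": status, "latency_ms": rng.choice(latencies_ms)}
```

A load test would sleep for `latency_ms` before returning the response, so the system under test sees realistic waits and a realistic mix of outcomes without a single real charge being attempted.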

Resiliency

Shopify also created a resiliency matrix which describes the dependencies of their system and possible failures that can occur. They use this to plan gamedays, where they simulate outages to check if their alerting mechanisms fire and if the right SOPs are in place.

Shopify also uses circuit breakers to improve service uptime and prevent cascading failures. They use an internally developed library called Semian to implement these in Ruby's HTTP client.
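For anyone who hasn't seen the pattern, here's a bare-bones circuit breaker in Python. It is a generic sketch of the technique, not Semian's API. The class name, parameters, and thresholds are assumptions chosen for illustration.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying it."""


class CircuitBreaker:
    def __init__(self, error_threshold=3, reset_timeout=5.0, clock=time.monotonic):
        self.error_threshold = error_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock          # injectable so tests can control time
        self.failures = 0
        self.opened_at = None       # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("failing fast; dependency assumed down")
            # Timeout elapsed: half-open, so let one trial call through.
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.error_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        self.failures = 0           # a success closes the circuit again
        self.opened_at = None
        return result
```

Once the breaker is open, callers get an immediate `CircuitOpenError` instead of waiting on a dependency that is probably down, which is what stops one slow service from tying up every worker upstream of it.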

In addition, Shopify developed a tool called Toxiproxy, which enables service failures and latency to be incorporated into unit tests. Toxiproxy consists of a TCP proxy written in Go and a client communicating with the proxy over HTTP. Developers can then simulate latency and failures by routing all connections through Toxiproxy.